Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer

Education

Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer

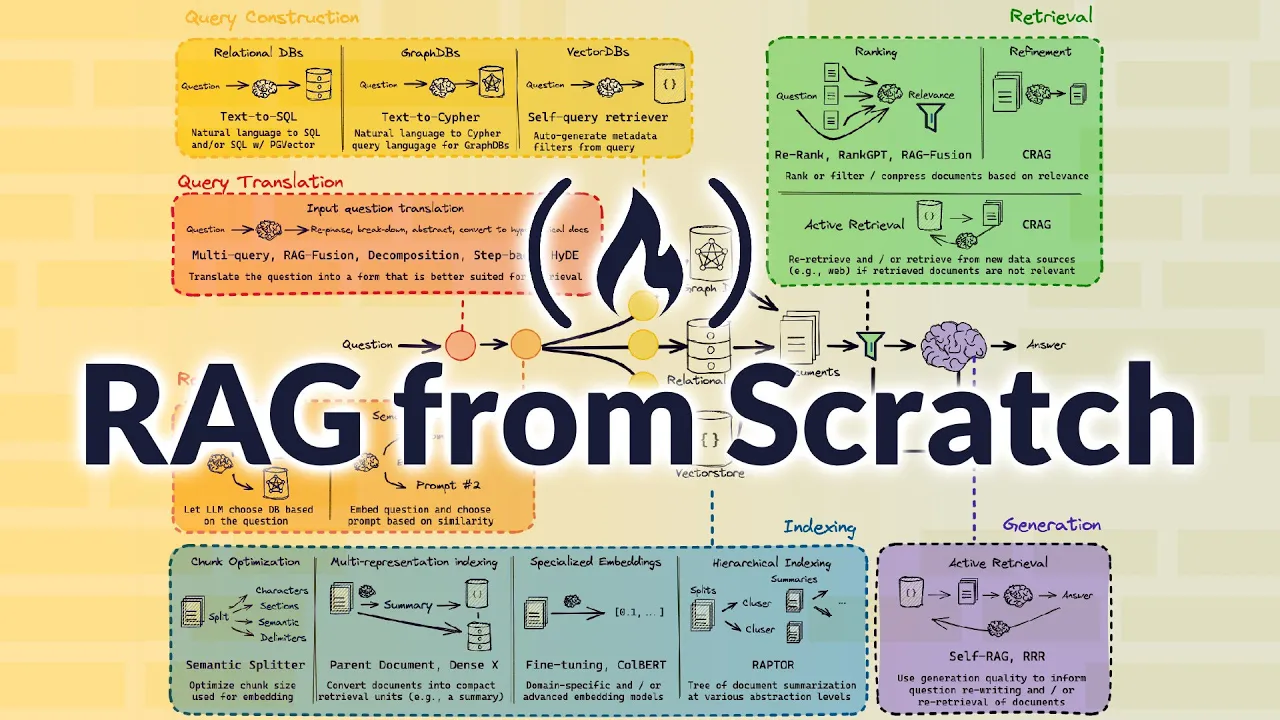

In this course, Lance Martin, a software engineer at LangChain, will teach you how to implement RAG (Retrieval-Augmented Generation) from scratch. LangChain is one of the most common ways to implement RAG, and Lance will help you understand how to use RAG to combine custom data with LLMs (Large Language Models).

Overview

Hi, this is Lance Martin. I'm a software engineer at LangChain. In this series, we'll dive deep into RAG or retrieval-augmented generation, which is critical for combining LLMs with external data sources. We’ll start by exploring the basics of RAG, its structure, and its foundational principles.

What is RAG?

RAG stands for retrieval-augmented generation. It works by indexing external data, retrieving relevant documents based on input queries, and generating answers grounded in this retrieved information. This technique is essential for accessing and leveraging large pools of private data that are not included in public LLM training datasets.

Understanding Context Windows

Recent advances have extended LLM context windows from 4,000 to a million tokens, allowing for extensive feeds of private data. This increased window size makes it feasible to supply LLMs with more extensive and detailed external information.

Key Concepts of RAG

1. Indexing

- Splitting Documents: Text documents are often split into smaller chunks to fit into LLM processing constraints.

- Embedding: Each document chunk is represented in a numerical vector format allowing for easy search and retrieval.

- Storing: Indexed document chunks are stored in vector databases for efficient access.

2. Retrieval

- Similarity Search: An embedded query from a user is used to search for similar document vectors.

- K-Nearest Neighbors (KNN): This algorithm retrieves the 'K' most similar documents to the query from the vector database.

3. Generation

- **Writing the Prompt:** A prompt template is defined to give a structure to the context and the question.

- LLM Integration: The retrieved documents are included in the LLM context window to generate responses.

- Response Parsing: The response generated by the LLM is parsed and presented back to the user.

Advanced RAG Techniques

Query Translation

- Multi-Query Generation: Rephrasing the input query to improve the chances of retrieving relevant documents.

- Decomposition: Breaking down complex questions into simpler sub-questions.

- Step-Back Prompting: Asking more abstract or generalized questions to improve retrieval quality.

Routing

- Logical Routing: Using the LLM’s internal logic to determine which data source to query.

- Semantic Routing: Embedding and comparing the similarity of queries to predefined prompts to choose the right source.

Query Construction

- Text-to-Metadata: Covering natural language into domain-specific filters for vector stores enabling precise and efficient queries.

Enhanced Indexing

- Multi-Representation Indexing: Creating multiple summaries of a document and indexing these summaries can improve the retrieval process.

- Hierarchical Indexing: Summarizing clusters of documents in a hierarchical structure ensures retrieval of relevant high-level context information.

RAG Evaluation

- Grading and Feedback: Implementing methods to evaluate retrieved documents and generated answers for relevance and correctness, reducing hallucinations, and retrying retrieval if needed.

Practical Implementation

Code Walkthroughs

- Examples and code snippets demonstrate each of these steps in action, using Python and popular libraries like LangChain, OpenAI, and Chroma.

- Real-world setup: Indexing real documents, writing effective prompts, integrating with different data sources, and running retrieval-generating pipelines.

Keyword

- RAG (Retrieval-Augmented Generation)

- LLM (Large Language Models)

- Context Windows

- Indexing

- Retrieval

- Generation

- Multi Query Generation

- Query Translation

- Logical Routing

- Semantic Routing

- Query Construction

- Enhanced Indexing

- Grading and Feedback

- LangChain

- OpenAI

- Chroma

FAQ

1. What is Retrieval-Augmented Generation (RAG)?

RAG involves indexing external data, retrieving relevant documents based on user queries, and generating responses grounded in these retrieved documents.

2. Why is the increased context window significant for RAG?

Context windows that are now as large as a million tokens allow LLMs to analyze more detailed and extensive batches of external data during processing.

3. How does multi-query generation improve document retrieval?

By rephrasing or decomposing the original query into multiple forms, it increases the likelihood that the retrieved documents will be relevant and useful.

4. What is the purpose of hierarchical indexing in RAG?

Hierarchical indexing clusters and summarizes documents, building a structure that allows the retrieval of summaries that integrate relevant information from multiple documents.

5. How do grading and feedback improve a RAG pipeline?

The implementation of evaluation and feedback mechanisms ensures that retrieved documents and generated answers are relevant and correct while minimizing hallucinations.

6. What libraries are frequently used for RAG implementation?

- LangChain, OpenAI, Chroma are commonly used in building and running RAG pipelines in practical settings.

7. Can RAG still be relevant in the age of large context LLMs?

Yes, as retrieval mechanisms can still provide precise, efficient access to specific information chunks and offer meaningful integration of external data sources.

This enriched markdown article, keywords, and FAQ aim to provide a comprehensive understanding of RAG implementation using Python as taught by Lance Martin, a LangChain Engineer.