Local Agentic RAG with LLaMa 3.1 - Use LangGraph to perform private RAG

In this article, we walk through how to build agentic retrieval-augmented generation (RAG) with Meta's LLaMa 3.1 model and LangGraph. The code is written so that you can switch between LLaMa and an OpenAI model by changing an environment variable, without altering a single line of code. This illustrates one of the strengths of LangChain: its standardized model interfaces make this kind of swap simple.

Initial Setup: Downloading Ollama

Download Ollama: Head over to the official Ollama GitHub repository and download the appropriate installer for your operating system. For instance, on Windows, click "Download for Windows" and follow the installation steps.

Verify Installation: After installation, Ollama automatically starts a server on port 11434. To confirm it is running, use `netstat` in Windows PowerShell and filter for this port. You can also check the CLI by typing `ollama --help` to see all available commands.

Download the LLaMa Model: Visit the Ollama website and navigate to the models section. Choose the LLaMa 3.1 model version that fits your needs, such as the 8 billion parameter model. Use `ollama run` followed by the model name to download the model and interact with it directly from the CLI.
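
As an alternative to `netstat`, the port check can be done from Python. This is a minimal sketch using only the standard library; the helper name `is_port_open` is made up for illustration, and it only confirms that something accepts TCP connections on the port, not that it is actually Ollama.

```python
import socket


def is_port_open(host: str = "127.0.0.1", port: int = 11434, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (refused, timed out, ...) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    status = "reachable" if is_port_open() else "not reachable"
    print(f"Server on port 11434 is {status}")
```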

Preparing LangGraph and Vector Database

Setting Up the Project: Open your code editor (e.g., Visual Studio Code) and prepare a new Python notebook. Install the required dependencies by running `pip install -r requirements.txt`.

Dependencies: Import the necessary classes, including `ChatOllama`, `OllamaEmbeddings`, `ChatOpenAI`, and `OpenAIEmbeddings`. Store your OpenAI API key in an environment variable for flexible switching between models.

Creating Factory Functions: Define factory functions for both the LLM and the embeddings that dynamically select between LLaMa and OpenAI models based on an environment variable, `llm_type`.

Create a Vector Store: Using Chroma, create a vector database with sample documents about a fictional restaurant. The agent retrieves documents from this database based on user queries.
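
The factory functions described above could look roughly like the following. This is a sketch under assumptions, not the article's exact code: it assumes the setting is read from an environment variable named `LLM_TYPE` with the values `ollama` or `openai`, that the `langchain-ollama` and `langchain-openai` packages are installed, and that the model names `llama3.1` and `gpt-4-turbo` are the ones you want. The helper `resolve_llm_type` is a hypothetical name.

```python
import os

# Assumption: provider is chosen via the LLM_TYPE environment variable.
SUPPORTED_TYPES = ("ollama", "openai")


def resolve_llm_type() -> str:
    """Read and validate the provider name from the environment."""
    llm_type = os.getenv("LLM_TYPE", "ollama").strip().lower()
    if llm_type not in SUPPORTED_TYPES:
        raise ValueError(f"Unsupported LLM_TYPE: {llm_type!r}")
    return llm_type


def get_llm():
    """Return a chat model for the configured provider."""
    # Imports are local so only the selected provider's package is required.
    if resolve_llm_type() == "openai":
        from langchain_openai import ChatOpenAI  # reads OPENAI_API_KEY from the environment
        return ChatOpenAI(model="gpt-4-turbo", temperature=0)
    from langchain_ollama import ChatOllama  # talks to the local Ollama server
    return ChatOllama(model="llama3.1", temperature=0)


def get_embeddings():
    """Return an embedding model for the configured provider."""
    if resolve_llm_type() == "openai":
        from langchain_openai import OpenAIEmbeddings
        return OpenAIEmbeddings()
    from langchain_ollama import OllamaEmbeddings
    return OllamaEmbeddings(model="llama3.1")
```

Because the rest of the code only ever calls `get_llm()` and `get_embeddings()`, switching providers comes down to changing the environment variable and rerunning the notebook.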

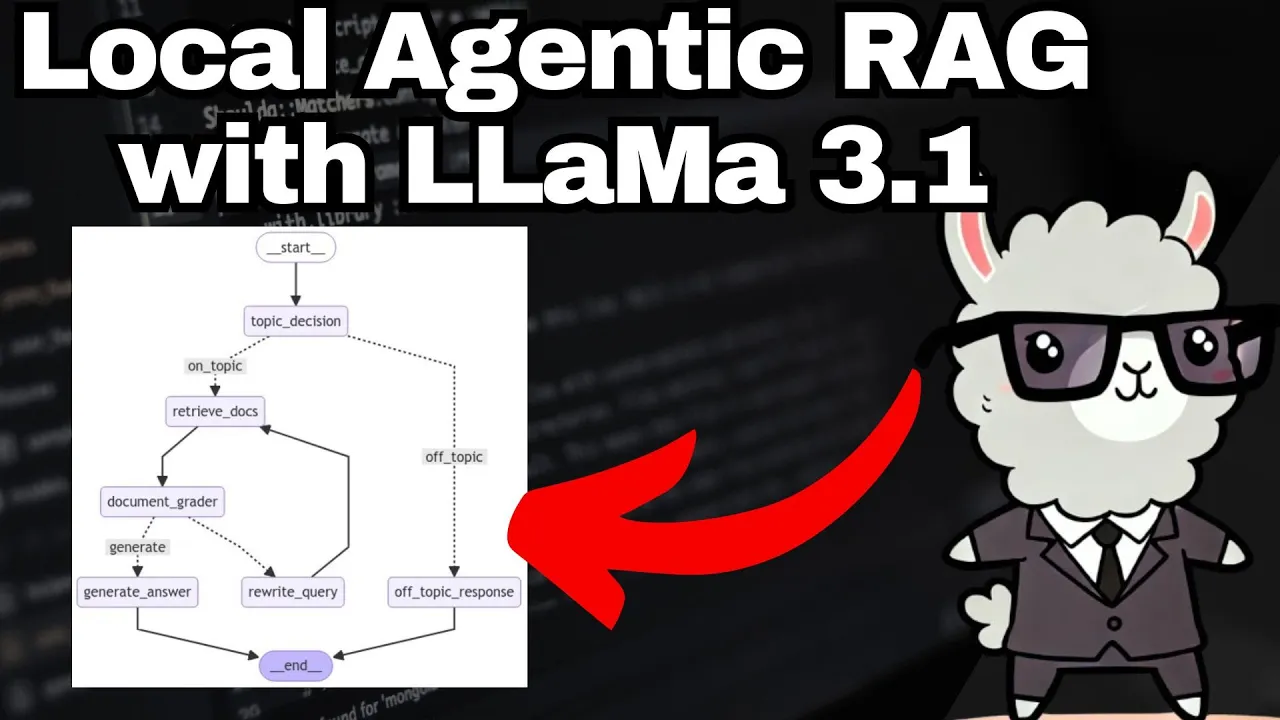
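
The vector store step could be sketched as follows, assuming the `langchain-chroma` and `langchain-core` packages are installed. The sample texts are invented placeholders for a fictional restaurant, not the article's data, and `build_retriever` is a hypothetical helper name. The `embeddings` argument is whatever embedding object you selected (for example `OllamaEmbeddings` or `OpenAIEmbeddings`).

```python
# Invented placeholder content for a fictional restaurant; replace with your own.
SAMPLE_TEXTS = [
    "Bella Vista is owned by Jane Example.",
    "Bella Vista is open from 11:00 to 22:00 every day.",
    "Bella Vista serves Italian dishes such as pasta and pizza.",
]


def build_retriever(embeddings, texts=SAMPLE_TEXTS, k: int = 2):
    """Embed the texts into an in-memory Chroma store and return a retriever."""
    # Local imports: these packages are only needed when the store is built.
    from langchain_chroma import Chroma
    from langchain_core.documents import Document

    docs = [Document(page_content=t, metadata={"source": "sample"}) for t in texts]
    store = Chroma.from_documents(documents=docs, embedding=embeddings)
    # Return the k most similar documents for each query
    return store.as_retriever(search_kwargs={"k": k})
```

Note that a store built with one embedding model should be queried with the same model, so rebuild the store when you switch providers.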

Building the Agent with LangGraph

Define Agent State: Create a custom agent state class inheriting from `TypedDict`, including attributes like the question, document grades, LLM outputs, documents, and on-topic/off-topic status.

Implementing Functions: Define functions for each node in the agent, including document retrieval, grading, and topic decision-making.
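
A state class along the lines described above might look like this. The field names and types are assumptions chosen to match the attributes listed in the text, and `new_state` is a hypothetical convenience helper.

```python
from typing import List, TypedDict


class AgentState(TypedDict):
    question: str         # the user's query
    grades: List[str]     # one "yes"/"no" relevance grade per retrieved document
    llm_output: str       # the generated answer
    documents: List[str]  # retrieved document texts
    on_topic: bool        # result of the topic decision


def new_state(question: str) -> AgentState:
    """Create an initial state for a fresh question."""
    return AgentState(
        question=question, grades=[], llm_output="", documents=[], on_topic=False
    )
```

Each node function then takes the current state and returns the keys it wants to update.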

Document Grader: Define a document grader class that evaluates whether each retrieved document is relevant to the user's query.
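
One simple way to sketch such a grader is to ask the model for a yes/no verdict and parse the reply. This is a simplification and an assumption about the design; a grader built on structured output would be more robust. The class name, prompt wording, and method names below are illustrative. The `llm` argument can be any object with an `invoke` method that accepts a string, which LangChain chat models provide.

```python
class DocumentGrader:
    """Grades document relevance by asking an LLM for a yes/no answer."""

    PROMPT = (
        "You are grading whether a document is relevant to a question.\n"
        "Question: {question}\n"
        "Document: {document}\n"
        "Answer with a single word: yes or no."
    )

    def __init__(self, llm):
        self.llm = llm

    def grade(self, question: str, document: str) -> bool:
        reply = self.llm.invoke(self.PROMPT.format(question=question, document=document))
        # Chat models return a message object with .content; fall back to the raw reply
        text = getattr(reply, "content", reply)
        return str(text).strip().lower().startswith("yes")

    def filter_relevant(self, question: str, documents: list) -> list:
        """Keep only the documents graded as relevant."""
        return [doc for doc in documents if self.grade(question, doc)]
```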

Routing Logic: Implement routing logic to handle on-topic and off-topic decisions, as well as whether to rewrite queries or generate final answers based on the retrieved documents' suitability.
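
The routing described above can be expressed as small functions that inspect the state and return the name of the next node. The node names (`retrieve`, `off_topic_response`, `generate`, `rewrite_query`) and the state keys are assumptions for illustration.

```python
from typing import Literal


def route_on_topic(state: dict) -> Literal["retrieve", "off_topic_response"]:
    """Send on-topic questions to retrieval and everything else to a canned reply."""
    return "retrieve" if state.get("on_topic") else "off_topic_response"


def route_after_grading(state: dict) -> Literal["generate", "rewrite_query"]:
    """Generate an answer if any document was graded relevant, otherwise rewrite the query."""
    if any(grade == "yes" for grade in state.get("grades", [])):
        return "generate"
    return "rewrite_query"
```

In practice you may want to cap the number of rewrites so that a question with no relevant documents cannot loop indefinitely.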

Finalizing the Agent: Set up the agent using `StateGraph`, adding nodes and conditional routing to handle off-topic and on-topic queries. Compile your graph and visualize it to ensure it follows the expected flow.
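
A stripped-down version of the graph assembly might look like this, assuming the `langgraph` package is installed. The node functions are stubs standing in for the real LLM and retriever calls, the grading and query-rewriting nodes are left out for brevity, and all node names and state keys are assumptions.

```python
from typing import TypedDict


class GraphState(TypedDict, total=False):
    question: str
    on_topic: bool
    documents: list
    llm_output: str


def topic_decision(state):
    # Stub: a keyword check standing in for an LLM-based topic classifier
    return {"on_topic": "bella vista" in state["question"].lower()}


def retrieve(state):
    # Stub: a real node would query the vector store here
    return {"documents": ["<retrieved document placeholder>"]}


def generate(state):
    # Stub: a real node would prompt the LLM with the retrieved documents
    return {"llm_output": f"Answer based on {len(state['documents'])} document(s)."}


def off_topic_response(state):
    return {"llm_output": "Sorry, I can only answer questions about the restaurant."}


def route_on_topic(state):
    return "retrieve" if state["on_topic"] else "off_topic_response"


def build_graph():
    # Imported here so the stub functions above can be used without langgraph
    from langgraph.graph import END, StateGraph

    workflow = StateGraph(GraphState)
    workflow.add_node("topic_decision", topic_decision)
    workflow.add_node("retrieve", retrieve)
    workflow.add_node("generate", generate)
    workflow.add_node("off_topic_response", off_topic_response)
    workflow.set_entry_point("topic_decision")
    workflow.add_conditional_edges(
        "topic_decision",
        route_on_topic,
        {"retrieve": "retrieve", "off_topic_response": "off_topic_response"},
    )
    workflow.add_edge("retrieve", "generate")
    workflow.add_edge("generate", END)
    workflow.add_edge("off_topic_response", END)
    return workflow.compile()
```

Calling `build_graph().invoke({"question": "How is the weather?"})` runs the off-topic branch, and `build_graph().get_graph().draw_mermaid()` returns a Mermaid diagram of the flow that you can render to check the wiring.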

Testing and Comparison

Test Cases: Run test cases by inputting queries like "How is the weather?" and "Who is the owner of Bella Vista?" to check how well LLaMa 3.1 performs in providing accurate responses.

Switching to OpenAI: Modify your environment variable to switch to OpenAI (e.g., GPT-4 Turbo) and rerun the test cases to compare the performance and quality of responses between models.

Conclusion

LangGraph makes it possible to run agentic RAG locally with LLaMa 3.1, and LangChain's standardized interfaces let you switch to another provider, such as OpenAI, without modifying the code. This makes it straightforward to compare language models and to pick the one that suits a given application.

Keywords

- Agentic RAG

- LangGraph

- LLaMa 3.1

- OpenAI Models

- Vector Database

- Document Grader

- llm_type

- Ollama

- LangChain

FAQ

Q1: What is LangGraph? A1: LangGraph is a framework from the LangChain team for building stateful, graph-based workflows around language models, using nodes, edges, and conditional routing.

Q2: How does switching between LLaMa and OpenAI models work?

A2: Switching is managed through a factory function that selects the model based on an environment variable, `llm_type`.

Q3: Why should I use LangChain for this task? A3: LangChain allows for seamless switching and standardized interfaces between different language models, making it easy to compare performance and develop flexible applications.

Q4: What models of LLaMa are available for download? A4: LLaMa 3.1 is available in different parameter sizes: 8 billion, 70 billion, and 405 billion parameters.

Q5: What are the key steps in creating an agent using LangGraph? A5: Key steps include defining agent state, implementing functions for nodes, setting up routing logic, and compiling and testing the graph.

Q6: Where can I find the installation and setup details for Ollama? A6: Detailed instructions are available in the official Ollama GitHub repository, which has installation files and command examples.